Evaluating the Web Search Accuracy of 16 AI Chatbots in Mid-2024

by Yuveganlife, July 10, 2024

Introduction

This post is an update of our previous article, "5 Simple Questions to Test Generative AI Chatbots' Web Search Accuracy, Updated Version". Since our initial evaluation of 12 chatbots' search accuracy at the beginning of 2024, several new players have emerged whose web search capabilities are worth evaluating. We were also interested in seeing how much the search accuracy of existing models has improved.

This updated post presents an evaluation of 16 chatbots, including both newcomers and improved versions of previously tested models, to give a more up-to-date picture of AI search engine performance in mid-2024.

Test candidate updates:

- Removed the limited-access LLMs from Perplexity and Phind. Only one LLM was selected from each website (Llama-3-70b was selected on HuggingChat for comparison with Llama-2-70b, which was tested previously).

- Added ChatGPT (4o), Devv, Genspark, Kagi, Lepton, Meta AI, and Opera Aria.

- Replaced the original Question 1 with a new question to expand the variety of domains covered.

- Replaced the original Question 2 with a new question to test chatbots' capacity to accurately retrieve information from related web sources.

- Changed 'How many podcasts' back to 'How many recipe sites' for Question 3 to evaluate chatbots' ability to retrieve the latest updates from a website.

Evaluation Goals

Our evaluation goals are to assess the chatbots' ability to:

- accurately understand user questions

- retrieve relevant, up-to-date, and accurate information from the web

- provide correct and proper responses through inference and reasoning

Criteria for the Test Candidates

We used the following criteria to select the AI-powered chatbot candidates for testing:

- They had to be generative, meaning they could understand and generate responses in natural language

- They had to have a web search feature

- They had to be free and widely accessible, so that a broad range of users could test them and provide feedback

Selected AI-Powered Chatbots

Based on the above criteria, the following 16 chatbot models were selected for testing:

- Bing Copilot (ChatGPT-4)

- ChatGPT (4o)

- Devv

- Genspark

- HuggingChat (Llama-3-70b-instruct)

- iAsk

- Kagi

- Komo

- Lepton

- Meta AI

- Opera Aria

- Perplexity (Basic)

- Phind (Instant)

- Pi

- Poe (Web-Search)

- You (Smart)

Evaluation Scope

By focusing our evaluation on 5 simple factual questions that require inference, we can establish a controlled and reliable baseline for assessing chatbot performance and identifying areas for improvement.

Yuveganlife.com, a new website launched in the summer of 2023, is relatively unknown compared to Eat Just, which has established itself over the past 10 years. Despite its obscurity, Yuveganlife.com is well indexed by search engines, while Eat Just's plant-based and cell-based food products are well known around the world.

The Acorn restaurant in Vancouver, established in 2012, received a Michelin star in 2022 and is well known locally.

This combination offers a unique opportunity to evaluate the chatbots' ability to accurately search for and infer information from newly updated web data, while also testing for potential errors introduced in the LLM training process.

By asking for the current local date and time of a city, we can test whether each product returns the correct local date and time without time zone issues. Such issues directly affect the quality of the practical advice the chatbot provides, such as travel schedules, appointment bookings, or financial statements.

During testing, we did not give the chatbots any feedback on their answers to these 5 questions, whether or not the answers were as expected.

Multiple LLMs from the same website (such as Phind.com) were tested using two different user accounts in two different browsers.

Limitation of Keyword-Based Search Engines

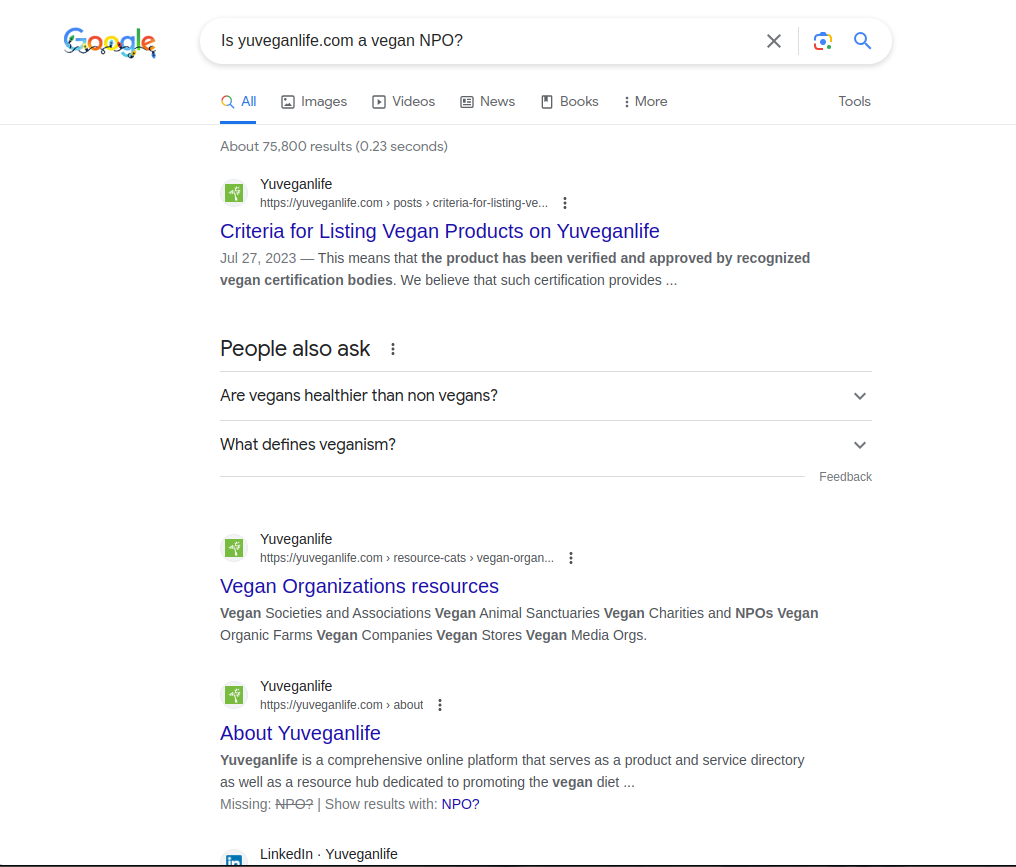

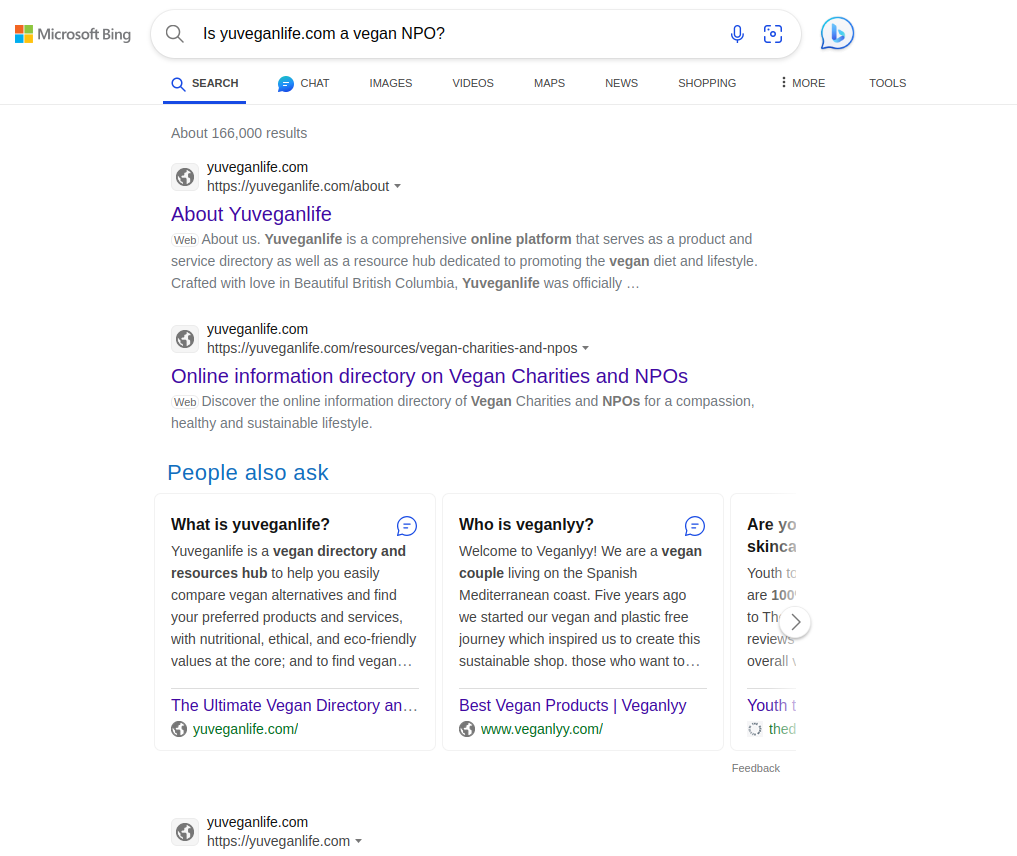

While these questions are simple enough and could be easily handled by a well-designed chatbot, they may not be able to be answered by a traditional keyword-based search engine.For example, when asking "Is Yuveganlife.com a vegan NPO?" to Google and Bing, both search engines simply returned some web pages that contained some of the keywords, but did not provide a clear answer to the question.

We can see from Google search cannot get relevant information:

We can see from Bing search cannot get relevant information too:

Test Execution Summary

We asked each chatbot 5 simple questions:

- Q1: Is Acorn Restaurant in Vancouver, Canada a fully vegan restaurant?

- Q2: Where is yuveganlife.com's headquarters?

- Q3: How many vegan recipe websites and blogs are listed on yuveganlife.com?

- Q4: What is the current local date and time in Vancouver, Canada?

- Q5: Does Eat Just manufacture Just Meat, a plant-based meat substitute made from pea protein?

In the table below, "0 / h" marks a score of 0 caused by hallucination.

| Chatbot | Q1: Yes/No | Q2: Where | Q3: How many | Q4: What date/time | Q5: Yes/No | Total Score |
|---|---|---|---|---|---|---|
| Bing Copilot | 3 | 2 | 1 | 3 | 2 | 11 |
| ChatGPT (4o) | 2 | 2 | 3 | 2 | 3 | 12 |
| Devv | 3 | 3 | 2 | 3 | 3 | 14 |
| Genspark | 3 | 3 | 3 | 0 | 3 | 12 |
| HuggingChat (Llama-3-70b) | 3 | 0 | 2 | 3 | 3 | 11 |
| iAsk | 2 | 0 | 1 | 0 | 0 / h | 3 |
| Kagi | 3 | 3 | 3 | 0 | 3 | 12 |
| Komo | 0 | 0 / h | 1 | 0 | 0 / h | 1 |
| Lepton | 3 | 0 | 1 | 0 | 0 / h | 4 |
| Meta AI | 3 | 0 | 1 | 2 | 3 | 9 |
| Opera Aria | 0 | 0 / h | 2 | 3 | 0 / h | 5 |
| Perplexity (Basic) | 3 | 3 | 1 | 0 | 3 | 10 |
| Phind (Instant) | 0 | 0 | 1 | 3 | 3 | 7 |
| Pi | 3 | 0 / h | 1 | 3 | 2 | 9 |
| Poe (Web-Search) | 3 | 3 | 1 | 3 | 0 / h | 10 |
| You (Smart) | 3 | 0 / h | 2 | 2 | 0 / h | 7 |
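To make the scoring scheme concrete, here is a minimal Python sketch that parses rows of the results table and checks that the five per-question scores add up to the Total Score column. It assumes that "0 / h" denotes a zero score caused by hallucination, which is consistent with the "Wrong due to hallucination" entries in the per-question lists; the helper names `parse_cell` and `parse_row` are hypothetical, and only three rows are copied in for brevity.

```python
# Hypothetical helpers for parsing rows of the score table.
# Assumption: "0 / h" means a score of 0 caused by hallucination.


def parse_cell(cell: str) -> tuple[int, bool]:
    """Return (score, hallucinated) for a cell such as '3' or '0 / h'."""
    parts = [p.strip() for p in cell.split("/")]
    score = int(parts[0])
    hallucinated = len(parts) > 1 and parts[1] == "h"
    return score, hallucinated


def parse_row(row: str) -> dict:
    """Parse one markdown table row into name, scores, and a total check."""
    cells = [c.strip() for c in row.strip().strip("|").split("|")]
    name, question_cells, total = cells[0], cells[1:6], int(cells[6])
    parsed = [parse_cell(c) for c in question_cells]
    scores = [s for s, _ in parsed]
    return {
        "name": name,
        "scores": scores,
        "hallucinations": sum(h for _, h in parsed),  # count of "h" cells
        "total": total,
        "total_matches": sum(scores) == total,  # does Q1..Q5 add up?
    }


# Three rows copied verbatim from the table.
rows = [
    "| Devv | 3 | 3 | 2 | 3 | 3 | 14 |",
    "| iAsk | 2 | 0 | 1 | 0 | 0 / h | 3 |",
    "| Komo | 0 | 0 / h | 1 | 0 | 0 / h | 1 |",
]

for row in rows:
    r = parse_row(row)
    print(r["name"], r["scores"], r["total"], r["hallucinations"], r["total_matches"])
```

Keeping the hallucination flag separate from the numeric score lets the same table answer two questions: how many points each chatbot earned, and how often a zero came from fabricated information rather than a refusal.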

Q1: Is Acorn Restaurant in Vancouver, Canada a fully vegan restaurant?

The FAQ page of The Acorn's website explicitly states that it is not a fully vegan restaurant. However, a few popular vegan restaurant review pages mistakenly list it as a vegan restaurant.

The candidate will receive 2 points if it provides an answer that is not the most appropriate response, such as "Yes, Acorn is a fully vegetarian restaurant."

The answers provided by AI chatbots were as follows:

- Bing Copilot: Correct. Score: 3 ( screenshot )

- ChatGPT (4o): Mostly Correct. Score: 2 ( screenshot )

- Devv: Correct. Score: 3 ( screenshot )

- Genspark: Correct. Score: 3 ( screenshot )

- HuggingChat (Llama-3-70b): Correct. Score: 3 ( screenshot )

- iAsk: Mostly Correct. Score: 2 ( screenshot )

- Kagi: Correct. Score: 3 ( screenshot )

- Komo: Wrong. Score: 0 ( screenshot )

- Lepton: Correct. Score: 3 ( screenshot )

- Meta AI: Correct. Score: 3 ( screenshot )

- Opera Aria: Wrong. Score: 0 ( screenshot )

- Perplexity (Basic): Correct. Score: 3 ( screenshot )

- Phind (Instant): Cannot answer. Score: 0 ( screenshot )

- Pi: Correct. Score: 3 ( screenshot )

- Poe (Web-Search): Correct. Score: 3 ( screenshot )

- You (Smart): Correct. Score: 3 ( screenshot )

Q2: Where is yuveganlife.com's headquarters?

Although the website does not state this explicitly, the About page mentions that "... in Beautiful British Columbia, Yuveganlife was officially launched in the summer of 2023", which suggests that it was established in BC, Canada, in 2023. The headquarters information can easily be found on Yuveganlife's LinkedIn About page: "Primary Headquarters: Greater Vancouver, Canada", which is the most relevant location.

The candidate will receive 2 points if it provides an answer that is not the most appropriate response, such as "British Columbia".

The answers provided by AI chatbots were as follows:

- Bing Copilot: Mostly Correct. Score: 2 ( screenshot )

- ChatGPT (4o): Mostly Correct. Score: 2 ( screenshot )

- Devv: Correct. Score: 3 ( screenshot )

- Genspark: Correct. Score: 3 ( screenshot )

- HuggingChat (Llama-3-70b): Cannot answer. Score: 0 ( screenshot )

- iAsk: Cannot answer. Score: 0 ( screenshot )

- Kagi: Correct. Score: 3 ( screenshot )

- Komo: Wrong due to hallucination. Score: 0 ( screenshot )

- Lepton: Cannot answer. Score: 0 ( screenshot )

- Meta AI: Cannot answer. Score: 0 ( screenshot )

- Opera Aria: Wrong due to hallucination. Score: 0 ( screenshot )

- Perplexity (Basic): Correct. Score: 3 ( screenshot )

- Phind (Instant): Cannot answer. Score: 0 ( screenshot )

- Pi: Wrong due to hallucination. Score: 0 ( screenshot )

- Poe (Web-Search): Correct. Score: 3 ( screenshot )

- You (Smart): Wrong due to hallucination. Score: 0 ( screenshot )

Q3: How many vegan recipe websites and blogs are listed on yuveganlife.com?

Five website update posts mention the number of recipe sites/blogs included: the first update mentioned 116, the second 180+, the third 225+, the fourth 230+, and the fifth 260+.

The candidate will receive three points for using information from the fifth update (260+), two points for using information from the fourth update (230+), one point for using information from the third or an earlier update, and zero points if it cannot provide an accurate answer.
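The tiered Q3 rubric can be expressed as a small lookup. This is a sketch, assuming the chatbot's answer has already been reduced to the figure it cites (for example, "260+" becomes 260); the function name `score_q3` is hypothetical, and treating a figure that matches no update post as a zero is our own interpretation of "cannot provide an accurate answer".

```python
from typing import Optional

# Figures cited in the five website update posts, oldest to newest.
UPDATE_FIGURES = [116, 180, 225, 230, 260]


def score_q3(reported: Optional[int]) -> int:
    """Hypothetical Q3 scorer, given the figure a chatbot reported."""
    if reported is None or reported not in UPDATE_FIGURES:
        return 0  # no answer, or a figure not traceable to any update post
    if reported == UPDATE_FIGURES[-1]:
        return 3  # fifth (latest) update: 260+
    if reported == UPDATE_FIGURES[-2]:
        return 2  # fourth update: 230+
    return 1  # third update or earlier


print([score_q3(x) for x in [260, 230, 225, 116, None]])  # [3, 2, 1, 1, 0]
```

Scoring by recency rather than by a simple right/wrong check is what lets this question separate chatbots that read the latest page from those relying on older indexed copies.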

The answers provided by AI chatbots were as follows:

- Bing Copilot: Least Correct. Score: 1 ( screenshot )

- ChatGPT (4o): Correct. Score: 3 ( screenshot )

- Devv: Mostly Correct. Score: 2 ( screenshot )

- Genspark: Correct (note that its "Direct Answer by AI" gave the least correct answer). Score: 3 ( screenshot )

- HuggingChat (Llama-3-70b): Mostly Correct. Score: 2 ( screenshot )

- iAsk: Least Correct. Score: 1 ( screenshot )

- Kagi: Correct. Score: 3 ( screenshot )

- Komo: Least Correct. Score: 1 ( screenshot )

- Lepton: Least Correct. Score: 1 ( screenshot )

- Meta AI: Least Correct. Score: 1 ( screenshot )

- Opera Aria: Mostly Correct. Score: 2 ( screenshot )

- Perplexity (Basic): Least Correct. Score: 1 ( screenshot )

- Phind (Instant): Least Correct. Score: 1 ( screenshot )

- Pi: Least Correct. Score: 1 ( screenshot )

- Poe (Web-Search): Least Correct. Score: 1 ( screenshot )

- You (Smart): Mostly Correct. Score: 2 ( screenshot )

Q4: What is the current local date and time in Vancouver, Canada?

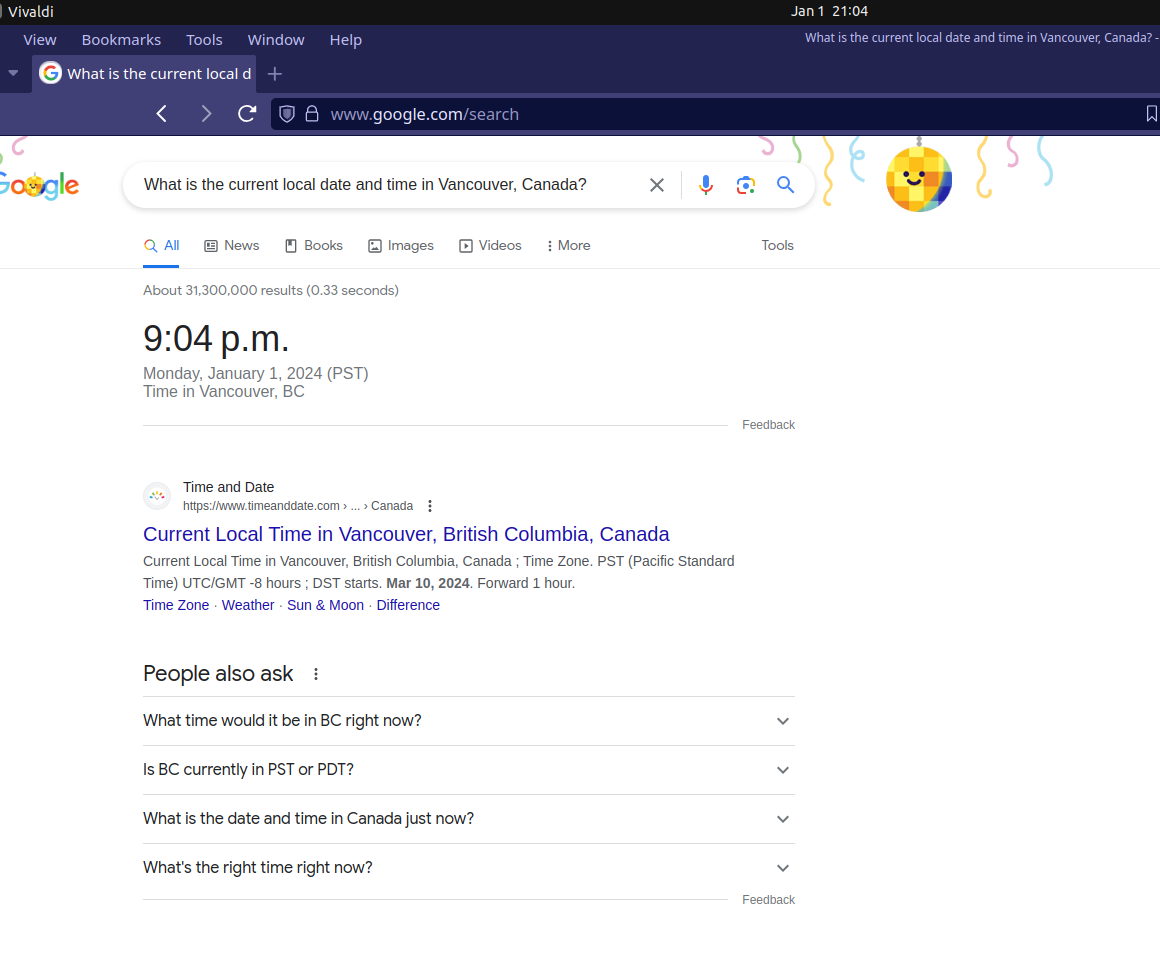

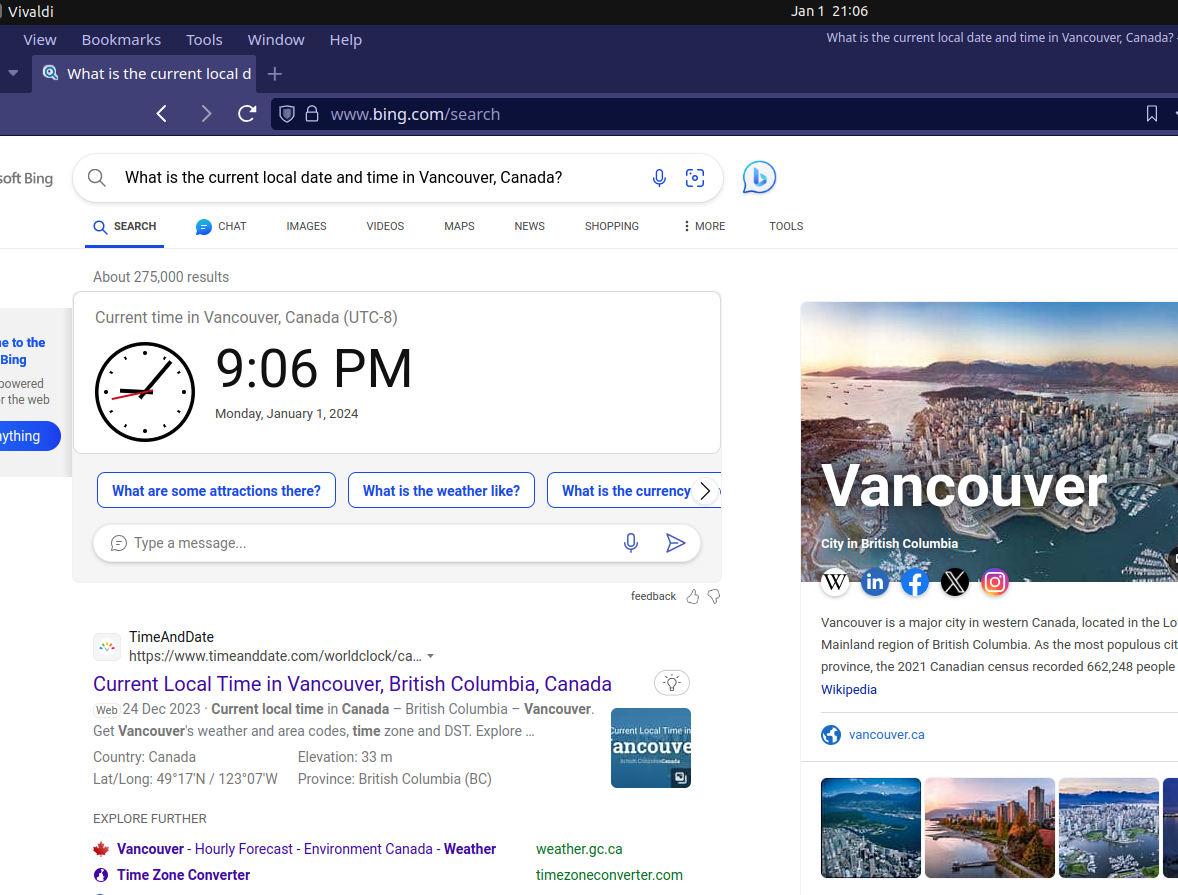

For a long time, people have confidently relied on Google or Bing for the correct current local date and time.

But how accurately can chatbot search engines deliver this information? Although this is a simple task, it must be implemented correctly; otherwise, errors can propagate when the result is used for other tasks, such as travel scheduling or financial statements.

Many chatbots use the host server's local date and time as a baseline reference. This works when the server sits in a single, fixed data center, but it produces inaccurate values when the software is deployed across cloud data centers in different time zones. Such edge cases are hard to catch when testing only during normal office hours.
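The server-clock pitfall can be illustrated with Python's standard `zoneinfo` module (Python 3.9+, which needs IANA time zone data on the host). This is not a description of how any tested chatbot actually works; it is a sketch that fixes one absolute instant, 04:30 UTC on 10 July 2024 (9:30 PM in Vancouver), and compares what a naive `datetime.now()` would show on servers configured for different time zones against an explicit conversion to `America/Vancouver`. The helper names are hypothetical.

```python
from datetime import datetime, timezone
from zoneinfo import ZoneInfo

CITY_TZ = ZoneInfo("America/Vancouver")


def naive_server_clock(instant_utc: datetime, server_tz: str) -> datetime:
    """Simulate datetime.now() on a host whose clock is set to server_tz.

    The result is naive: the zone information is dropped, so code reading
    it cannot tell which city the wall-clock time belongs to.
    """
    return instant_utc.astimezone(ZoneInfo(server_tz)).replace(tzinfo=None)


def city_local_time(instant_utc: datetime) -> datetime:
    """Convert an absolute instant to Vancouver local time, including DST."""
    return instant_utc.astimezone(CITY_TZ)


# Fixed instant for reproducibility: 04:30 UTC on 10 July 2024.
instant = datetime(2024, 7, 10, 4, 30, tzinfo=timezone.utc)

for tz in ["America/Vancouver", "America/New_York", "UTC"]:
    shown = naive_server_clock(instant, tz)
    print(f"server set to {tz:<18} shows {shown:%Y-%m-%d %H:%M}")

print(f"correct Vancouver time: {city_local_time(instant):%Y-%m-%d %H:%M %Z}")
```

Only the server that happens to run on Pacific Time shows 2024-07-09 21:30; the New York and UTC servers already show 10 July, which is the kind of date error that surfaces in the late evening Pacific Time and is easy to miss during office hours.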

Other chatbots derive the current local date and time from information on the web. Generally speaking, these LLM-based systems obtain recent information through RAG (Retrieval-Augmented Generation), using either their own proprietary retrieval components or other search engines such as Google and Bing. How often and how efficiently the retrieval database is updated varies between systems, which can cause AI search engines to return outdated information about the current date and time.

These tests were conducted after 9 PM Pacific Time to see whether the chatbots handle this time zone edge case correctly.

The candidate will receive 3 points for providing the correct local date and time in Vancouver, Canada, 2 points for stating the correct date with a local time within a 20-minute error margin, and zero points if it cannot answer or is completely wrong.
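The Q4 rubric can be sketched as a function that compares a chatbot's answer with the true Vancouver wall-clock time at the moment the question was asked. The 20-minute margin comes from the rubric; the 2-minute tolerance for a fully correct answer is a hypothetical value of ours, since the rubric does not state one, and the name `score_q4` is likewise illustrative. Answers are assumed to be already parsed into naive local `datetime` values.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional
from zoneinfo import ZoneInfo

VANCOUVER = ZoneInfo("America/Vancouver")
MARGIN = timedelta(minutes=20)  # 2-point margin stated in the rubric
EXACT = timedelta(minutes=2)    # hypothetical tolerance for a 3-point answer


def score_q4(answer: Optional[datetime], asked_at_utc: datetime) -> int:
    """Score a reported Vancouver date/time (naive local wall-clock value)."""
    if answer is None:
        return 0  # cannot answer
    truth = asked_at_utc.astimezone(VANCOUVER).replace(tzinfo=None)
    if answer.date() != truth.date():
        return 0  # wrong calendar date
    error = abs(answer - truth)
    if error <= EXACT:
        return 3
    if error <= MARGIN:
        return 2
    return 0


asked = datetime(2024, 7, 10, 4, 30, tzinfo=timezone.utc)  # 9:30 PM in Vancouver
for reply in [datetime(2024, 7, 9, 21, 31), datetime(2024, 7, 9, 21, 45),
              datetime(2024, 7, 10, 4, 30), None]:
    print(reply, score_q4(reply, asked))
```

This covers only the numeric part of the rule: it would give 0 to an answer expressed in UTC, whereas You (Smart) received 2 points for such an answer, so the published scores also reflect some judgment beyond this sketch.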

Each testing session was based on Pacific Time (Vancouver observes Pacific Daylight Time, UTC-7, in summer), with the local date and time displayed at the top of each screenshot.

The answers provided by AI chatbots were as follows:

- Bing Copilot: Correct. Score: 3 ( screenshot )

- ChatGPT (4o): Mostly Correct. Score: 2 ( screenshot )

- Devv: Correct. Score: 3 ( screenshot )

- Genspark: Wrong. Score: 0 ( screenshot )

- HuggingChat (Llama-3-70b): Correct. Score: 3 ( screenshot )

- iAsk: Wrong. Score: 0 ( screenshot )

- Kagi: Wrong. Score: 0 ( screenshot )

- Komo: Cannot answer. Score: 0 ( screenshot )

- Lepton: Wrong. Score: 0 ( screenshot )

- Meta AI: Mostly Correct. Score: 2 ( screenshot )

- Opera Aria: Correct. Score: 3 ( screenshot )

- Perplexity (Basic): Wrong. Score: 0 ( screenshot )

- Phind (Instant): Correct. Score: 3 ( screenshot )

- Pi: Correct. Score: 3 ( screenshot )

- Poe (Web-Search): Correct. Score: 3 ( screenshot )

- You (Smart): Correct, but the date and time were given in UTC rather than Pacific Time. Score: 2 ( screenshot )

Q5: Does Eat Just manufacture Just Meat, a plant-based meat substitute made from pea protein?

"Eat Just was founded in 2011 by Josh Tetrick and Josh Balk. In July 2017, it started selling a substitute for scrambled eggs called Just Egg that is made from mung beans. It released a frozen version in January 2020. In December 2020, the Government of Singapore approved cultivated meat created by Eat Just, branded as GOOD Meat. A restaurant in Singapore called 1880 became the first place to sell Eat Just's cultured meat." (Source: Wikipedia)

"Beyond Meat, Inc. is a Los Angeles–based producer of plant-based meat substitutes founded in 2009 by Ethan Brown. The company's initial products were launched in the United States in 2012... The burgers are made from pea protein isolates..." (Source: Wikipedia)

Both Eat Just's and Beyond Meat's revolutionary food products were reported widely around the world and are likely to be included in each LLM's training data.

This yes/no question asks about an imaginary brand name, Just Meat, which mixes Eat Just's cultivated meat product with Beyond Meat's plant-based product, in order to test for LLM hallucination.

This question and answer have been indexed by Google but rank very low in keyword searches ( Screenshot ), so the indexing should not affect the new test results. The candidate will receive three points for correctly identifying that no such information exists, two points for a partly correct answer (such as treating cultured meat as plant-based meat), and zero points for incorrect information based on hallucination.

- Bing Copilot: Mostly Correct. Cultured meat is not the same as plant-based meat. Score: 2 ( screenshot )

- ChatGPT (4o): Correct. Score: 3 ( screenshot )

- Devv: Correct. Score: 3 ( screenshot )

- Genspark: Correct. Score: 3 ( screenshot )

- HuggingChat (Llama-3-70b): Correct. Score: 3 ( screenshot )

- iAsk: Wrong due to hallucination. Score: 0 ( screenshot )

- Kagi: Correct. Score: 3 ( screenshot )

- Komo: Wrong due to hallucination. Score: 0 ( screenshot )

- Lepton: Wrong due to hallucination. Score: 0 ( screenshot )

- Meta AI: Correct. Score: 3 ( screenshot )

- Opera Aria: Wrong due to hallucination. Score: 0 ( screenshot )

- Perplexity (Basic): Correct. Score: 3 ( screenshot )

- Phind (Instant): Correct. Score: 3 ( screenshot )

- Pi: Mostly Correct. Cultured meat is not the same as plant-based meat. Score: 2 ( screenshot )

- Poe (Web-Search): Wrong due to hallucination. Score: 0 ( screenshot )

- You (Smart): Wrong due to hallucination. Score: 0 ( screenshot )

Conclusion

Based on the results of our latest testing, the free chatbot Devv was the most effective at providing accurate responses to our 5 updated test questions, demonstrating its ability to understand user queries and retrieve relevant, reliable information.

Additional observations from the updated test results:

- Devv ranked first with 14/15, followed by ChatGPT (4o), Genspark, and Kagi, tied at 12/15.

- The overall web search accuracy of the 16 AI chatbots was 57.1% (137 of 240 possible points), an improvement from 49.7% in the previous test of 12 chatbots.

- In the July 2024 test, 50% of chatbots (8 of 16) scored 10/15 or higher, compared to only 33.33% in January 2024.

- Among the existing chatbots tested in January 2024, Poe (Web search) and HuggingChat (Llama-3-70b) have shown dramatic improvements in web search accuracy.
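The aggregate figures can be reproduced from the Total Score column of the results table. The sketch below assumes that "overall accuracy" means points earned divided by points available (16 chatbots, 15 points each); under that assumption it yields 137/240, or 57.1%, and counting chatbots that scored 10/15 or higher gives 8 of 16, which matches the 50% figure.

```python
# Total scores copied from the "Total Score" column of the results table.
totals = {
    "Bing Copilot": 11, "ChatGPT (4o)": 12, "Devv": 14, "Genspark": 12,
    "HuggingChat (Llama-3-70b)": 11, "iAsk": 3, "Kagi": 12, "Komo": 1,
    "Lepton": 4, "Meta AI": 9, "Opera Aria": 5, "Perplexity (Basic)": 10,
    "Phind (Instant)": 7, "Pi": 9, "Poe (Web-Search)": 10, "You (Smart)": 7,
}
MAX_PER_BOT = 15  # 5 questions x 3 points each

# Assumption: overall accuracy = points earned / points available.
overall_accuracy = sum(totals.values()) / (MAX_PER_BOT * len(totals))
share_at_least_10 = sum(t >= 10 for t in totals.values()) / len(totals)
best = max(totals, key=totals.get)

print(f"overall accuracy: {overall_accuracy:.1%}")         # 57.1%
print(f"share scoring >= 10/15: {share_at_least_10:.1%}")  # 50.0%
print("top scorer:", best)                                 # Devv
```

Note that a strict "over 10/15" threshold would count only 6 of 16 chatbots (37.5%), so the 50% figure corresponds to an inclusive threshold of 10/15.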

Disclaimer

This test study was designed and conducted independently by Yuveganlife.com, with no affiliation with or involvement from any other organization.

The test results for each chatbot do not indicate that product's accuracy on other searches, as they are limited to these five updated test questions.